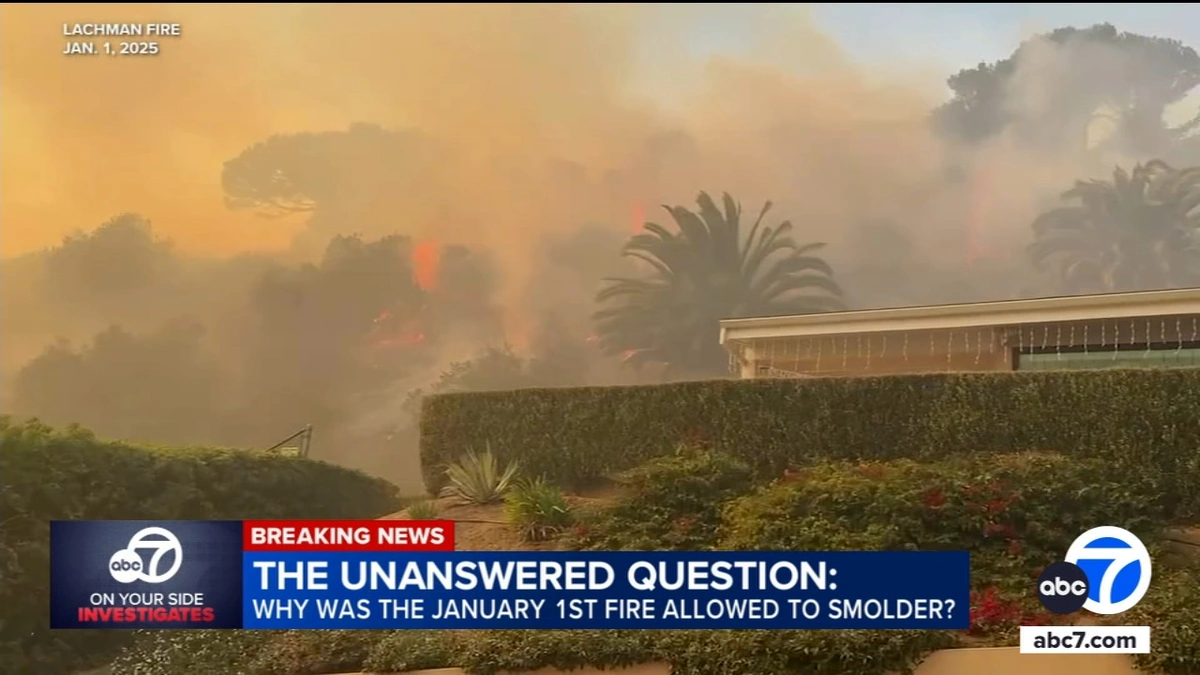

Okay, let’s be real. You’ve probably heard about the Palisades Fire. Devastating, right? But what if I told you the story had a twist wilder than a rollercoaster designed by a caffeinated chimpanzee? We’re talking about a Florida man…and…wait for it…ChatGPT.

I know, I know. It sounds like the start of a bad joke. But this is the 2020s, folks, where reality is often stranger than fiction. So buckle up, because we’re diving deep into this bizarre saga.

How Did a Florida Man Get Involved in the Palisades Fire? (The “Why” Angle)

Here’s the thing: the initial reports were… well, they were confusing. News outlets were buzzing about a suspect linked to the Palisades Fire, and the name being thrown around was, indeed, associated with the Sunshine State. But the ChatGPT angle? That’s where things get really interesting, and where the “why” of this story takes center stage. It’s not as simple as “Florida man uses ChatGPT to start a fire.” That’s way too straightforward for this decade.

What fascinates me is the potential misuse – or perhaps the unintended consequences – of AI. We’re increasingly reliant on these tools, but are we truly aware of their limitations and the potential for manipulation? Put plainly: could someone misuse an AI to spread misinformation, or even to cover their tracks after a crime? The implications are staggering. We’re not just talking about a wildfire; we’re talking about the potential for AI to be woven into the fabric of criminal activity.

And yes, the Palisades Fire was a big deal. It threatened homes, disrupted lives, and caused significant environmental damage. Now add the suggestion that AI may have played a role, even indirectly, and the fire investigation gets another layer of complexity. The authorities were on it, and rightly so.

The ChatGPT Connection | Separating Fact from Fiction

Okay, so how exactly did ChatGPT enter the chat? (Pun intended, sorry, not sorry). Early speculation suggested that the suspect may have used the AI chatbot to research arson techniques or even to generate alibis. This is, at this point, speculation. However, it raises serious questions about the responsible use of AI.

Let’s be honest: anyone can ask ChatGPT almost anything. The AI itself doesn’t have malicious intent. The danger lies in how individuals choose to use the information it provides. Think about it: someone could query ChatGPT for information on creating diversions, concealing evidence, or even understanding the vulnerabilities of fire detection systems. The AI isn’t setting the fire; it’s just a tool, like a hammer. The person swinging the hammer bears the responsibility. I initially thought this was straightforward, but then I realized the subtle ways AI could be used to obfuscate guilt.

The Broader Implications for AI and Criminal Justice

This is where things get really interesting. This incident – regardless of the ultimate truth – throws a spotlight on the growing intersection of artificial intelligence and criminal justice. Law enforcement agencies are already grappling with the challenges of digital evidence and cybercrime. The potential for AI to be used in criminal activities adds another layer of complexity.

The real question is this: are our legal systems ready? Do investigators have the tools and training to trace AI-related crimes effectively? And, perhaps even more importantly, how do we ensure that AI is used ethically and responsibly, both by law enforcement and by the general public? These questions need answers. A common mistake I see people make is assuming that AI is inherently neutral. It’s not. It reflects the data it was trained on and the biases of its creators. We need to be critically aware of these biases and take steps to mitigate them.

The Future of Fighting Fires (and Crime) in the Age of AI

So, where do we go from here? Well, one thing is clear: we need to have a serious conversation about the ethical implications of AI. We need to develop clear guidelines and regulations to prevent its misuse. And we need to equip law enforcement with the tools and training they need to investigate AI-related crimes effectively.

But there’s also a potential upside: AI can be used to fight wildfires. Imagine AI-powered drones that can detect fires early, predict their spread, and even assist in firefighting efforts. Imagine AI algorithms that can analyze vast amounts of data to identify potential arson hotspots or predict criminal behavior. It’s a double-edged sword, but with careful planning and responsible implementation, we can harness the power of AI for good.

And what about that Florida man? Well, the investigation is still ongoing. But the fact that ChatGPT was even mentioned in connection with the case should serve as a wake-up call. The digital world is evolving at an exponential rate, and we need to be prepared for the challenges – and opportunities – that lie ahead. I think it’s safe to say that this case, with its possible AI connection, will develop further, so stay tuned. In the meantime, rely on official sources for accurate information.

Don’t be afraid, though; this should all be taken with a grain of salt. Stay informed, stay skeptical, and always question what you read online. And maybe, just maybe, avoid taking fire-starting advice from robots. Just a thought.

FAQ | Palisades Fire & The AI Angle

Was the Florida man actually using ChatGPT to start the fire?

The investigation is still ongoing, and the exact role of ChatGPT, if any, is still unclear. Early reports suggested a possible connection, but it’s important to rely on official sources for accurate information.

Could ChatGPT be used to help someone commit arson?

Potentially, yes. AI can be used to research various topics, including criminal activities. However, the responsibility for committing a crime always lies with the individual.

Are there laws against using AI for illegal purposes?

Existing laws against arson and other crimes would apply, regardless of whether AI was used in the commission of the crime. New laws specifically addressing AI-related crimes may be needed in the future.

How can we prevent AI from being used for criminal activities?

Education, regulation, and ethical guidelines are crucial. We need to raise awareness about the potential misuse of AI, develop responsible AI practices, and back both with legal safeguards.

What if I see someone using AI in a suspicious way?

Report it to the appropriate authorities. If you suspect someone is planning to commit a crime, contact your local law enforcement agency. But remember, don’t rush to judgment.

How do I avoid spreading misinformation about this?

Stick to credible news sources, and double-check that a source is reliable before sharing any information. Don’t be afraid to admit that you don’t know and need to do more research. I initially thought this was straightforward, but then I realized how easy it is to spread fake news nowadays.

And that’s the story – for now. It’s a wild one, right? But it speaks to a bigger truth: we’re living in a world where technology is rapidly changing, and we need to be prepared for the unexpected. As technology advances, we need to use it responsibly. The one thing you absolutely must double-check is where your information comes from.

This case is hardly the only one. We already see many instances of the digital world colliding with the real one, and that will only become more common.