Ever wondered how scientists predict which enzyme will bind to which substrate? It’s not just luck or endless lab experiments. Increasingly, it’s algorithms, and specifically something called Graph Neural Networks (GNNs). Now, I know what you might be thinking: “Graphs? Neural Networks? Sounds complicated!” And let’s be honest, it can be. But stick with me, because this technology is changing how researchers work in fields from drug discovery to materials science.

The real question isn’t whether we can use GNNs; it’s why they are so effective for this particular task. What makes them better than the traditional methods scientists have been using for decades? The answer lies in the inherent structure of biological data, and in the ability of GNNs to work with that structure directly.

Why Graph Neural Networks Excel in Predicting Enzyme Specificity

Traditional machine learning models often struggle with biological data because they need molecules and proteins to be flattened into fixed-length lists of features, and much of the structure is lost along the way. In reality, these things are complex networks of atoms and bonds. Imagine trying to understand a city by just looking at a list of street names: you’d miss the crucial relationships between them, the flow of traffic, and the overall layout. That’s where graph-based learning comes in.
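To make the “list of street names” problem concrete, here is a small sketch in Python. It deliberately uses the crudest flat representation I could think of, a plain count of atoms, which is my own simplification and not a descriptor any real pipeline is claimed to use. Hydrogens are left out, and the helper names are hypothetical.

```python
from collections import Counter

def atom_counts(atoms):
    """Flat view: how many atoms of each element, with no connectivity."""
    return Counter(atoms)

def bond_types(atoms, bonds):
    """Graph view: which element pairs are actually bonded."""
    return sorted(tuple(sorted((atoms[i], atoms[j]))) for i, j in bonds)

# Heavy atoms only. Both molecules have the formula C2H6O.
ethanol = (["C", "C", "O"], [(0, 1), (1, 2)])         # C-C-O
dimethyl_ether = (["C", "O", "C"], [(0, 1), (1, 2)])  # C-O-C

same_composition = atom_counts(ethanol[0]) == atom_counts(dimethyl_ether[0])
same_bonding = bond_types(*ethanol) == bond_types(*dimethyl_ether)

print(same_composition)  # the flat view cannot tell them apart
print(same_bonding)      # the graph view can
```

The two molecules look identical as a bag of atoms, yet they are wired differently, and that wiring is exactly what a graph keeps.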

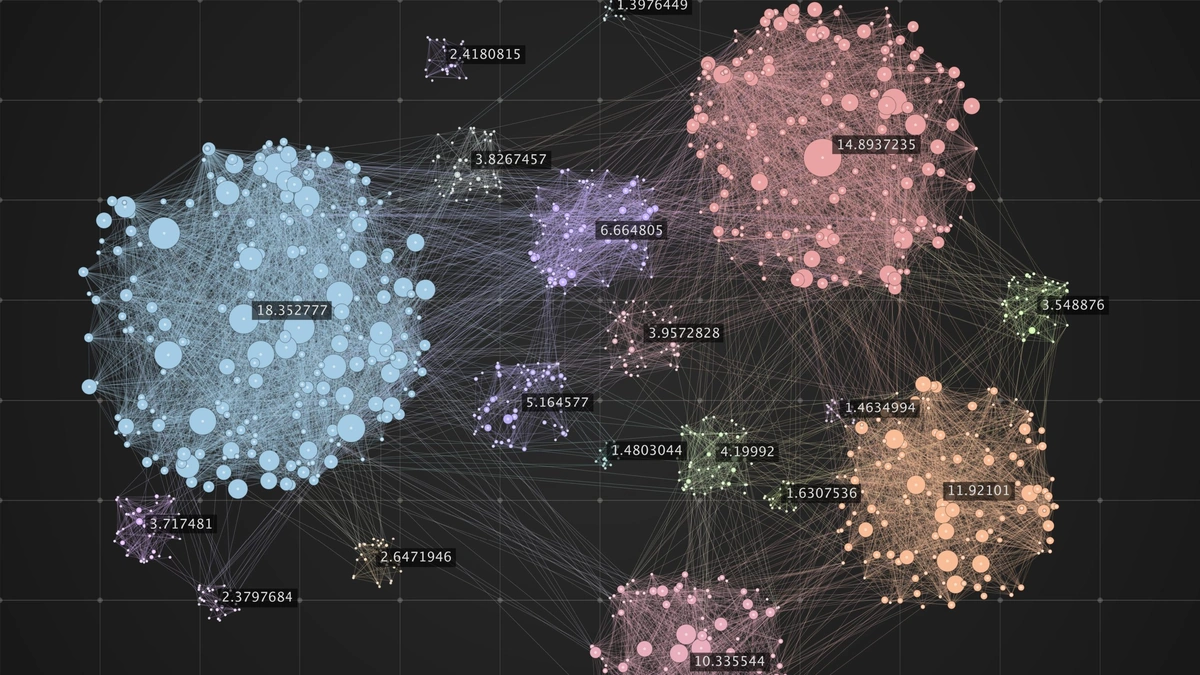

GNNs are designed to operate directly on graph-structured data. They treat each atom in a molecule (or each amino acid in a protein) as a node in a graph, and the bonds (or, for proteins, contacts) between them as edges. Then they use a process called “message passing” to learn about the local neighborhood of each node: every node aggregates information from its neighbors and uses it to update its own representation, round after round. What fascinates me is how this lets the network capture the structural arrangements and chemical context that govern enzyme-substrate binding, something that flat representations often overlook.
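Here is a minimal sketch of a single message-passing round, written with NumPy. It assumes the simplest possible rule, where each node averages its own features with those of its neighbors. Real GNN layers add learnable weights and a nonlinearity on top of this, which I have left out, and the function name and toy values are purely illustrative.

```python
import numpy as np

def message_passing_step(node_features, adjacency):
    """One round: each node averages itself with its bonded neighbors."""
    n = adjacency.shape[0]
    with_self = adjacency + np.eye(n)           # let each node hear itself too
    summed = with_self @ node_features          # sum over self + neighbors
    counts = with_self.sum(axis=1, keepdims=True)
    return summed / counts                      # mean aggregation

# Toy three-atom chain: 0 - 1 - 2, with one made-up feature per atom.
toy_adjacency = np.array([[0, 1, 0],
                          [1, 0, 1],
                          [0, 1, 0]], dtype=float)
toy_features = np.array([[1.0], [0.0], [4.0]])

updated = message_passing_step(toy_features, toy_adjacency)
print(updated)
```

After one round, the middle atom’s value reflects both of its neighbors, while each end atom has only heard from the middle one.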

Think of it like this: instead of just knowing the ingredients in a dish (the individual atoms), GNNs understand how those ingredients are arranged and interact with each other to create the final flavor (the overall binding affinity). This nuanced understanding is what lets them make accurate predictions about enzyme-substrate interactions.

Breaking Down the Process: How GNNs Predict Binding

So, how does this magic actually work? Let’s break it down into a few key steps. First, we need to represent the enzyme and the substrate as graphs. This involves identifying the atoms and bonds, and assigning them meaningful features. For example, we might encode information about the atom type (carbon, nitrogen, oxygen, etc.), its charge, and its connectivity.
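As a rough sketch of that first step, the snippet below turns a list of atoms and bonds into a feature matrix and an adjacency matrix. The three-element vocabulary, the feature layout (one-hot atom type, charge, number of bonded neighbors), and the `build_graph` helper are all assumptions made for illustration. In practice a cheminformatics toolkit would usually do this parsing for you.

```python
import numpy as np

ATOM_TYPES = ["C", "N", "O"]  # illustrative vocabulary, not exhaustive

def build_graph(atoms, charges, bonds):
    """Return (node feature matrix, adjacency matrix) for one molecule."""
    n = len(atoms)
    adjacency = np.zeros((n, n))
    for i, j in bonds:                 # bonds are undirected
        adjacency[i, j] = 1.0
        adjacency[j, i] = 1.0

    one_hot = np.zeros((n, len(ATOM_TYPES)))
    for idx, symbol in enumerate(atoms):
        one_hot[idx, ATOM_TYPES.index(symbol)] = 1.0

    charge = np.asarray(charges, dtype=float).reshape(n, 1)
    degree = adjacency.sum(axis=1, keepdims=True)   # connectivity
    features = np.hstack([one_hot, charge, degree])
    return features, adjacency

# Ethanol, heavy atoms only: C - C - O, all neutral.
features, adjacency = build_graph(["C", "C", "O"], [0, 0, 0], [(0, 1), (1, 2)])
print(features)
```

Each row describes one atom, and the adjacency matrix records who is bonded to whom. Together they are the input the message-passing layers work on.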

Next comes the heart of the GNN: the message-passing layers. These layers iteratively update the node representations by aggregating information from their neighbors. Each node “listens” to its neighbors, learns about their properties, and incorporates that knowledge into its own representation. Each round lets information travel one bond further, so repeating the process multiple times allows it to propagate across the graph and capture longer-range dependencies.
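To see why the number of rounds matters, here is a small experiment under the same simplifying assumption of plain neighbor averaging with no learned weights. A signal is placed on the first atom of a five-atom chain, and we count how many atoms have heard about it after each round. The names and the chain itself are hypothetical.

```python
import numpy as np

def propagate(signal, adjacency, rounds):
    """Apply mean-aggregation message passing and keep every intermediate state."""
    n = adjacency.shape[0]
    with_self = adjacency + np.eye(n)
    averaging = with_self / with_self.sum(axis=1, keepdims=True)
    history = [signal]
    for _ in range(rounds):
        signal = averaging @ signal
        history.append(signal)
    return history

# Five atoms in a row: 0 - 1 - 2 - 3 - 4.
n_atoms = 5
chain = np.zeros((n_atoms, n_atoms))
for i in range(n_atoms - 1):
    chain[i, i + 1] = chain[i + 1, i] = 1.0

start = np.zeros((n_atoms, 1))
start[0, 0] = 1.0  # only atom 0 carries the signal at first

history = propagate(start, chain, rounds=4)
reach = [int(np.count_nonzero(state)) for state in history]
print(reach)  # number of atoms the signal has reached after 0, 1, 2, ... rounds
```

The signal advances one bond per round, which is why the depth of a GNN roughly sets how far apart two atoms can be and still influence each other.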

Finally, after several rounds of message passing, the node representations are pooled into a single summary of each graph, and the GNN outputs a prediction about the binding between the enzyme and the substrate. This prediction is based on the final node representations, which encode the molecular structures and the chemical context of each atom. In other words, the model generates a score indicating how likely the enzyme and substrate are to bind, which serves as a proxy for whether the enzyme will act on that substrate.
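One plausible way to get from node representations to a single score is sketched below. It assumes mean pooling over each graph, concatenation of the two pooled vectors, and a single linear layer followed by a sigmoid. Published models differ in all of these choices, and the random numbers here merely stand in for embeddings and trained weights.

```python
import numpy as np

def readout(node_embeddings):
    """Pool a variable number of node vectors into one graph-level vector."""
    return node_embeddings.mean(axis=0)

def binding_score(enzyme_nodes, substrate_nodes, weights, bias):
    """Combine both graph vectors and squash the result into (0, 1)."""
    pair = np.concatenate([readout(enzyme_nodes), readout(substrate_nodes)])
    logit = float(pair @ weights + bias)
    return 1.0 / (1.0 + np.exp(-logit))   # sigmoid

rng = np.random.default_rng(0)
enzyme_nodes = rng.normal(size=(6, 4))     # placeholder: 6 nodes, 4 features
substrate_nodes = rng.normal(size=(3, 4))  # placeholder: 3 nodes, 4 features
weights = rng.normal(size=8)               # placeholder for trained weights

score = binding_score(enzyme_nodes, substrate_nodes, weights, bias=0.0)
print(score)
```

Mean pooling is a convenient choice because the result does not depend on the order in which the atoms happen to be listed, and it works for graphs of any size.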

The Impact on Drug Discovery and Beyond

Okay, so GNNs can predict enzyme-substrate specificity. But why should you care? Well, consider the field of drug discovery. Identifying the right drug candidate often involves screening vast libraries of molecules to find those that bind to a specific target enzyme. This process is incredibly time-consuming and expensive. Computational methods like GNNs can dramatically accelerate this process by predicting which molecules are most likely to bind, reducing the number of experiments needed, and saving valuable resources.
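In code, the screening idea boils down to ranking candidates by predicted score and sending only the top few to the lab. The sketch below assumes the scores have already been produced by a trained model. The molecule names and numbers are made-up placeholders, not real predictions.

```python
def shortlist(predicted_scores, top_k):
    """Return the names of the top_k candidates by predicted binding score."""
    ranked = sorted(predicted_scores.items(), key=lambda item: item[1], reverse=True)
    return [name for name, _ in ranked[:top_k]]

# Placeholder scores for a tiny hypothetical library.
predicted_scores = {"mol_A": 0.12, "mol_B": 0.91, "mol_C": 0.47, "mol_D": 0.78}

candidates = shortlist(predicted_scores, top_k=2)
print(candidates)
```

With a library of millions of molecules, the same few lines decide which handful is worth the cost of an actual experiment.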

But the applications don’t stop there. GNNs are also being used to design new enzymes with specific properties, to understand metabolic pathways, to predict protein-ligand binding, and even to model the behavior of complex biological systems. And as the quality of chemical compound datasets improves, so will the models built on them. The potential here is to deepen our understanding of biology and to change how work gets done in fields like medicine and biotechnology.

Challenges and Future Directions

While GNNs have shown remarkable promise, they’re not without their challenges. One major hurdle is the availability of high-quality training data. GNNs, like any machine learning model, require large amounts of labeled data to learn effectively. And in the biological domain, obtaining such data can be difficult and expensive.

Another challenge is the interpretability of GNNs. While they can make accurate predictions, it’s often difficult to understand why they made those predictions. This lack of interpretability can be a barrier to adoption, especially in fields like drug discovery where understanding the underlying mechanisms is crucial.

However, researchers are actively working to address these challenges. New methods are being developed to improve the interpretability of GNNs, and new datasets are being generated to train them. And, as computational power increases, we can expect to see even more sophisticated GNN architectures emerge, capable of tackling even more complex biological problems. Ongoing research into new machine learning techniques is also aimed at improving both speed and accuracy.

So, where does all this leave us? Graph Neural Networks are proving to be powerful tools for predicting enzyme-substrate specificity and opening up new possibilities in drug discovery, materials science, and beyond. As the technology continues to evolve, we can expect more applications to emerge, and these networks are likely to become more important still.

FAQ

What exactly is enzyme substrate specificity?

It refers to an enzyme’s ability to selectively bind and act on particular substrates, much like the classic lock-and-key model in biochemistry.

How do GNNs differ from other machine learning approaches?

GNNs are uniquely designed to handle graph-structured data, making them ideal for modeling molecules and proteins.

What are some potential limitations of using GNNs in this context?

The availability of high-quality data and the interpretability of the models are two key challenges.

Are there any open-source tools available for working with GNNs?

Yes, several libraries like PyTorch Geometric and Deep Graph Library (DGL) offer resources for developing and training GNNs.

Can GNNs be used to predict other types of molecular interactions?

Absolutely! They’re versatile and can be applied to various prediction tasks in chemistry and biology.

What kind of hardware is needed to train these networks?

While smaller networks can be trained on CPUs, larger models often benefit from GPUs for faster computation.